Built-in functions of DashaScript dialogue design

DashaScript has a number of built-in functions to give you intricate control over the interactions Dasha AI has with your users. The functions are designed in a way to let you create human-like conversational experiences for your users.

The built-in functions are inline and can be called from the executable sections (the do or transitions sections of a node or a digression). Built-in functions are identified by the # prefix.

    node myNode {
        do {
            // Calling the "sayText" function.
            #sayText("Wake up, Neo. The Matrix has you.");
            #sayText("Do you want to follow the white rabbit?");
            wait *;
        }
        transitions {
            // Calling the "messageHasIntent" function.
            transition1: goto myNode2 on #messageHasIntent("yes");
            transition2: goto myNode2 on #messageHasIntent("no");
        }
        onexit {
            transition1: do {
                // Calling the "log" function to write to the log.
                #log("He follows the white rabbit.");
            }
            transition2: do {
                // Calling the "log" function to write to the log.
                #log("He does not follow the white rabbit.");
            }
        }
    }

Blocking calls

Blocking call (synchronous) functions block execution of further operations until the function is resolved. When it is resolved, the function returns the result and code execution continues.

GPT

answerWithGPT

Answers the user using GPT, with the current conversation history and the ability to call DSL functions.

Parameters

| Name | Type | Description |

|---|---|---|

| prompt | string | A string with GPT prompt |

| promptName | string | A unique name for the prompt made for better response tracking |

| gptOptions | { model: string; openai_apikey: string; } | model - name of model, for example openai/gpt-4, openai_apikey - your APIKEY for OpenAI (see gptOptions) |

| repeatMode? | IRepeatMode | Controls repeating logic, (see IRepeatMode) Default value: override |

| interruptible? | boolean | whether the phrase could be interrupted. Default value: false |

| args | {[x:string]:string; }? | Arguments that should be directly placed into the prompt using {{argName}} construction |

| sayOptions? | { fillerSpeed?: number?; fillerTexts?: string[]?; fillerDelay?: number?; fillerStartDelay?: number?; speed?: number?; interruptDelay?: number?; pauseOnVoice?: boolean?; useGluing?: boolean?; interruptConditions?: (InterruptCondition | InterruptCondition[])[] } | Overrides speed and emotion of saying text, delays before interrupting by human voice and interruptConditions (see SayOptions) |

Returns

Returns an object with fields:

- interrupted - true, if GPT was interrupted by the user
- functionCalled - true, if a function was called during or instead of an answer
- completed - true, if GPT has completed answering (and the connection to the user was not closed)
- saidPhrase - GPT’s phrase
- calledFunctionNames - names of the functions that were called (an empty array if none was called)
- thinking - text that shows GPT’s thinking process prior to response generation (only when Thinking is enabled)

gptOptions

| Name | Type | Description |

|---|---|---|

| ignore_json_output | boolean | If true, ignores GPT’s responses that are formatted as JSON. Responses formatted as JSON might be handled incorrectly by the bot’s Text-to-Speech. Default value is false. |

| allow_function_name_in_response | boolean | If true , allows names of the functions in GPT’s responses. It helps in cases when GPT calls a function in its response. If set to false - will skip any generated response that contains name of existing function (e.g. function name is “Date” - any response that contains the word “date” will be skipped). Default value is true. |

| stop_sequences | string | If a given sequence is generated - stops generating further response. There can be up to 4 sequences. Different sequences are split by ; sign. Default value is empty. |

| ignore_current_prepend | number | Defines whether received prepend will be passed to GPT’s history. Number must be an integer. If 0 then ignores - otherwise passes to the history. Default value is 0. |

| top_p | number | Defines creativity of GPT’s response. If set to 0.1, GPT will generate responses that fit top-10% of all probability mass. It’s recommended to change this option OR temperature , but not both. Number must be a float. Default value is 0.5. |

| temperature | number | Defines creativity of GPT’s response. Higher values like 0.8 will make the output more random, while lower values like 0.2 will make it more focused and deterministic. It’s recommended to change this option OR top_p, but not both. Number must be a float. Default value is 0.5. |

| max_tokens | number | The maximum number of tokens allowed for the generated answer. Creating a limit with this parameter might lead to only a partial answer. Number must be an integer. Default is set to a full response. |

| presence_penalty | number | Number between -2.0 and 2.0. Positive values penalize new tokens based on whether they appear in the text so far, increasing the model's likelihood to talk about new topics. Number must be a float. Default value is 0. |

| frequency_penalty | number | Number between -2.0 and 2.0. Positive values penalize new tokens based on their existing frequency in the text so far, decreasing the model's likelihood to repeat the same line verbatim. Number must be a float. Default value is 0. |

| prepend | string | Your input phrase that GPT will begin with before sending a generated response. Default value is empty. |

| function_call | string | Controls how the model responds to function calls. none means the model doesn't call a function, and responds to the end-user. auto means the model can pick between an end-user or calling a function. You can also put in a function name. In that case, only the function that you put in will be called. Default value is auto . |

| openai_apikey | string | Here you put in your GPT’s APIKEY. |

| openai_endpoint | string | Here you put in your GPT’s endpoint. For example, you can change it to Azure's-endpoint (if you plan to use Azure OpenAI services). Default is OpenAI’s endpoint. |

| sort_history | string | Sorts the answerWithGPT function history by time, so that the transcription can be read. We don’t recommend changing it. Default value is true. |

| merge_history | string | Merges different phrases within the same turn into one, which keeps the transcription coherent. We don’t recommend changing it. Default value is true. |

| history_length | number | Shows how many phrases from bot or user will be passed as a history to GPT. By default full history is passed. |

| log_history | string | Defines whether the history is passed to the debug log. Default value is false. |

| max_retries | number | Max number of retries that GPT will be called within the same turn. answerWithGPT retry happens in two cases: 1 - when user says anything while GPT is generating a response. In this case the retry will contain in a history a new phrase from user. 2 - with Thinking enabled, retry will happen if generated response doesn’t fit the required thinking format. Number must be an integer. Default value is 1. |

| retry_temperature_scale | number | Increases temperature on each retry. Default value is 2.0. |

| retry_topp_scale | number | Increases top_p on each retry. Default value is 2.0. |

| save_response_in_history | boolean | Enables/disables saving function for call requests and responses in GPT history. Can be used for service-related requests that are not a part of the conversation. Default value is true |

| provided_functions | string[] | Defines which functions from the current context can be used for a request. All functions in the current context are used by default. |

| provided_scopes | string[] | Controls which functions are available by specifying allowed scopes. Functions with matching scopes or no scopes will be available. See function scopes for details. Default value is null |

| except_functions | string[] | Explicitly excludes specific functions from being available to GPT, regardless of other inclusion rules. Has the highest priority. See excluding functions for details. Default value is null |

| use_dynamic_tools | boolean | Enables/disables using tools passed from SDK. Default value is true |

| response_format | string | Defines the structure of the LLM response using JSON Schema. Enables strict validation and typed outputs for more predictable and reliable responses. The schema must be converted to a string using .toString() when passed to LLM functions. |

| allow_incomplete | boolean | If true, includes text currently being spoken by the human in the history. Default value is false |

| seed | number | If specified, the system will make a best effort to sample deterministically such that repeated requests with the same seed and parameters should return the same result. Determinism is not guaranteed. Number must be an integer. Default value is not set. |

| service_tier | string | Specifies the latency tier to use for processing the request. This parameter is relevant for customers subscribed to the scale tier service: • If set to 'auto', and the Project is Scale tier enabled, the system will utilize scale tier credits until they are exhausted • If set to 'auto', and the Project is not Scale tier enabled, the request will be processed using the default service tier with a lower uptime SLA and no latency guarantee • If set to 'default', the request will be processed using the default service tier with a lower uptime SLA and no latency guarantee • If set to 'flex', the request will be processed with the Flex Processing service tier Default value is 'auto'. |

| openrouter_models | string[] | Array of model names for automatic fallback when using OpenRouter models. If the primary model is unavailable, rate-limited, or encounters errors, OpenRouter will automatically try the fallback models in order. Default value is not set. |

| openrouter_provider | string | JSON object as string for fine-grained control over OpenRouter provider selection and routing. Allows customization of load balancing, provider preferences, price controls, and other routing options. Default value is not set. |

| channelIds | string[] | Specifies conversation channels to include in history. If not provided, only the current channel is used. See #getAsyncBlockDescription to retrieve channel IDs. |
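
The options above are passed in the same gptOptions object as model and openai_apikey. The sketch below is only an illustration: the node name gpt_tuned, the prompt, and all option values are assumptions chosen for the example, not recommended settings.

```dsl
node gpt_tuned {
    do {
        // Option names come from the gptOptions table; the values are illustrative.
        var a = #answerWithGPT(`You are a polite assistant. Answer in one short sentence.`,
            interruptible: true,
            gptOptions: {
                model: "openai/gpt-4",
                openai_apikey: "YOUR_APIKEY",
                // Lower temperature for more focused output; top_p is left at its default.
                temperature: 0.2,
                // A token limit may cut a long answer short.
                max_tokens: 100,
                // Pass only the last 10 phrases to GPT as history.
                history_length: 10,
                // Two stop sequences, separated by the ; sign.
                stop_sequences: "###;END"
            });
        wait *;
    }
    transitions {
        next_turn: goto gpt_tuned on true;
    }
}
```

Only one of temperature and top_p is changed here, following the recommendation in the table.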

Say Options GPT

| Name | Type | Description |

|---|---|---|

| fillerTexts | string[] | An array of strings that will be used as fillers. (see Fillers) |

| fillerSpeed | number | Defines speed of a synthesized filler phrase. Default value as in phrasemap |

| fillerDelay | number | Defines time in sec that is waited after previous filler phrase. Default value is 2 |

| fillerStartDelay | number | Defines the time in seconds given to GPT to produce an answer before fillers start. Default value is 2 |

| speed | number | Defines speed of a synthesized phrase. Default value as in phrasemap |

| interruptDelay | number | Defines the time in seconds of human voice needed to interrupt the phrase being pronounced. Default value is 2 |

| useGluing | boolean | If true, composite phrasemap phrases will be concatenated before synthesizing. Default value is true (recommended) |

| interruptConditions | (InterruptCondition | InterruptCondition[])[] | Defines triggers in user's utterance to interrupt currently pronouncing phrase. |

| pauseOnVoice | boolean? | Overrides current pauseOnVoice for this call, true for pausing current phrase pronunciation, if the human starts to speak. Default value: null - means that current pauseOnVoice configuration will be used from connectOptions |

| pauseOnVoiceDelay | number? | Delay in seconds before stop speaking (start of fade out) when human is interrupting the agent. Related to pauseOnVoice. Default value: 0 |

| cancellationName | string? | A unique name for this operation that can be used with #cancelToken to programmatically cancel. When cancelled, completed is false. Default value: null |

Example

    node gpt {
        do {
            // We will be here when user says something, or retry is required
            var a = #answerWithGPT(`Your name is Mary.
                You are working in fruit seller contact center.
                You can only tell customer a price of requested fruit.
                If you have no price for fruit tell: We have no such fruit
                `,
                interruptible:true,
                gptOptions: { model:"openai/gpt-4", openai_apikey: "YOUR_APIKEY" },
                sayOptions: { interruptDelay: 1.0 });
            // Call answerWithGPT one more time for passing result to the GPT
            if (a.functionCalled) {
                #log("Called a function, retry");
                goto retry;
            }
            wait *;
        }
        transitions {
            gpt: goto gpt on true;
            retry: goto gpt;
        }
    }

askGPT

Asks GPT for help and returns its response, which you can use to form an answer to the user.

Parameters

| Name | Type | Description |

|---|---|---|

| prompt | string | A string with GPT prompt |

| gptOptions | { model: string; openai_apikey: string; } | model - name of a model (e.g. openai/gpt-4); openai_apikey - your APIKEY for OpenAI (see gptOptions) |

| args | {[x:string]:string; }? | Arguments that should be directly placed into the prompt using {{argName}} construction |

Returns

- functionCalled - true, if a function was called during or instead of GPT’s response
- completed - always true
- responseText - GPT’s response to the question
- calledFunctionNames - names of the functions that were called (an empty array if none was called)
- thinking - text that shows GPT’s thinking process prior to response generation
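
A minimal sketch of an askGPT call, using only the parameters and return fields listed above. The node name summarize, the prompt, and the topic argument are hypothetical and exist only for this illustration.

```dsl
node summarize {
    do {
        // {{topic}} is replaced with the value from args ("topic" is a hypothetical name).
        var result = #askGPT("Summarize the customer's request about {{topic}} in one sentence.",
            gptOptions: { model: "openai/gpt-4", openai_apikey: "YOUR_APIKEY" },
            args: { topic: "delivery" });
        // responseText holds GPT's response to the question.
        #log(result.responseText);
        if (result.functionCalled) {
            // Log which functions were called during or instead of the response.
            #log(result.calledFunctionNames);
        }
        exit;
    }
}
```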

Fillers

Fillers are short phrases that the bot says to show presence and activity in the dialogue. They are especially helpful when GPT takes a long time to generate a response.

To turn fillers on, add your preferred phrases to fillerTexts.

For example:

    var a = #answerWithGPT($prompt,
        interruptible:true,
        gptOptions: { model:"openai/gpt-4" },
        sayOptions: {
            interruptDelay: 1.0,
            fillerTexts: [
                "Okay...",
                "Uhm...",
                "Hm...",
            ],
            fillerSpeed: 1.0
        });

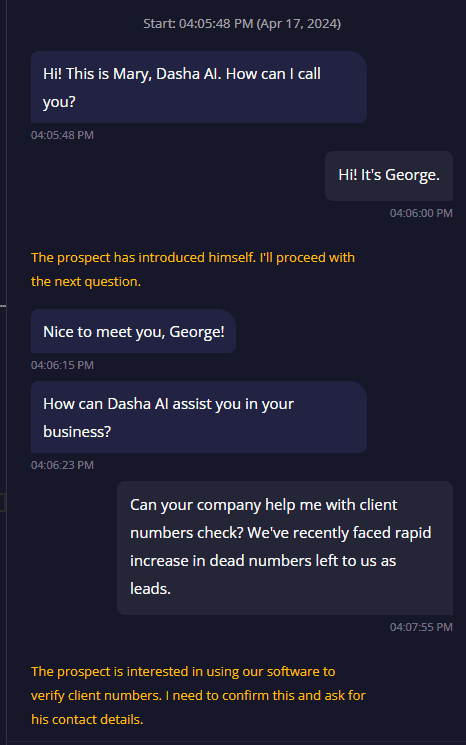

Thinking

Thinking is a feature aimed at improving how closely GPT follows the requirements of the prompt. It also lets you check, through the debug log, at which stage of the dialogue GPT failed.

You can see thinking process with the Inspector tool on our platform. It will look something like this.

Thinking parameters can be changed by gptOptions in askGPT or answerWithGPT functions.

Parameters

| Name | Type | Description |

|---|---|---|

| thinking_enabled | boolean | If true , turns on thinking feature. Default value is false. |

| thinking_choices | number | Defines how many different variations of a response GPT is allowed to generate. The best generated response will be used. Number must be an integer. Default value is 1. |

| thinking_fallback_enabled | boolean | If true, allows to use generated responses from GPT that don’t fit the thinking format (in cases where none of the choices fit it). Thus, answerWithGPT function will be able to give some kind of response to the user. Default value is false. |

| thinking_empty_enabled | boolean | Allows empty thinking messages. Default value is false. |

| thinking_multi_answer_enabled | boolean | Allows thinking-reply-thinking… chain within the same turn. Default value is false. |
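
As a rough sketch, Thinking could be switched on by adding the parameters above to gptOptions. The node name gpt_thinking, the context variable prompt, and the chosen values are assumptions made for this example.

```dsl
context {
    // Hypothetical input that holds the GPT prompt.
    input prompt: string;
}

node gpt_thinking {
    do {
        var a = #answerWithGPT($prompt,
            interruptible: true,
            gptOptions: {
                model: "openai/gpt-4",
                openai_apikey: "YOUR_APIKEY",
                thinking_enabled: true,
                // Let GPT generate two variations and use the best one.
                thinking_choices: 2,
                // Still answer the user if no choice fits the thinking format.
                thinking_fallback_enabled: true
            });
        // The thinking field is only filled when Thinking is enabled.
        #log(a.thinking);
        wait *;
    }
    transitions {
        next_turn: goto gpt_thinking on true;
    }
}
```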

getAvailableTools

Gets currently available tools for answerWithGPT/askGPT functions.

Parameters

| Name | Type | Description |

|---|---|---|

| options | { channelIds?: string[]?; use_dynamic_tools?: boolean?; [x: string]: <string | number | boolean | string[]>?; }? | use_dynamic_tools - Include dynamic tools in the return value. Default value is true. channelIds - Define channels from which to retrieve tools. Empty array returns tools from all channels. Default value is the current execution channel. |

Returns

Array of objects:

- name: string - Name of the tool
- description: string - Tool description
- schema: string - Serialized arguments schema
- channelId: string? - ID of the channel where the tool is available. Null means available in all channels
- scopes: string[]? - Array of scopes assigned to the tool. Null if no scopes are defined for the tool

    var tools = #getAvailableTools({ use_dynamic_tools: false });
    #assert(tools.length() == 2);
    #assert(tools[0]?.name == "foo");
    #assert(tools[0]?.channelId == "");
    #assert(tools[0]?.scopes?.length() == 2);
    #assert(tools[1]?.name == "bar");
    #assert(tools[1]?.channelId == "");
    #assert(tools[1]?.scopes is null);

    set tools = #getAvailableTools();
    #assert(tools.length() == 3);
    #assert(tools[0]?.name == "foo");
    #assert(tools[0]?.channelId == "");
    #assert(tools[0]?.scopes?.length() == 2);
    #assert(tools[1]?.name == "bar");
    #assert(tools[1]?.channelId == "");
    #assert(tools[1]?.scopes is null);
    #assert(tools[2]?.name == "baz");
    #assert(tools[2]?.channelId is null);
    #assert(tools[2]?.scopes?.length() == 1);

format

The format function allows you to format a source string by incorporating provided arguments, local variables, and context variables. This function operates similarly to the answerWithGPT and askGPT methods, where the arguments (args) are embedded within the prompt.

Parameters

| Name | Type | Description |

|---|---|---|

| source | string | The string to be formatted using {{arg}} placeholders. |

| args | {[x:string]:string; }? | An optional object containing arguments to be inserted into the prompt using {{argName}} placeholders. |

Returns

The formatted string with the provided arguments, local variables, and context variables inserted in place of their respective placeholders.

Example

    context {
        input SomeString: string = "In context";
        input SomeString2: string = "In context 2";
        input SomeString3: string = "In context 3";
        input SomeNumber: number = 42;
    }

    start node test {
        do {
            var SomeString = "Local Override";
            var SomeString2 = "Local Override 2";
            var formatted = #format("{{SomeNumber}}: {{SomeString}}/{{SomeString2}}/{{SomeString3}}", { SomeString: "Override" });
            #log(formatted);
            exit;
        }
    }

This example demonstrates the formatting process, resulting in the string: 42: Override/Local Override 2/In context 3.

The sequence of replacement is as follows:

1. Arguments: First, the function checks for the variable in the provided arguments (args).
2. Scope Variables: If the variable is not found in the arguments, it checks for the variable in the local scope.
3. Context Variables: If the variable is still not found, it checks for the variable in the context.

Warning: If the variable is not found in any of the above steps, a warning is displayed and the placeholder {{your_variable_name}} remains unchanged.

This ensures that the most relevant and specific variable is used for formatting the string.

Session control

connect (blocking call)

Attempts to establish a connection (starts a call) and returns the result of the attempt. Blocks execution until the result of the connection attempt is received.

#connect(endpoint, options);

Parameters

| Name | Type | Description |

|---|---|---|

| endpoint | string | endpoint to connect to |

| options | ConnectOptions | see Connect Options |

Returns

object

Object depends on connection attempt outcome.

On success: returns an object with the following fields:

| Name | Type | Description |

|---|---|---|

| msgId | string literal | contains value "OpenedSessionChannelMessage" |

| endpoint | string | endpoint with which a connection is established |

On failure: returns an object with the following fields:

| Name | Type | Description |

|---|---|---|

| msgId | string literal | contains value "FailedOpenSessionChannelMessage" |

| reason | string | the reason why the connection could not be established |

| details | string | additional information |
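
Because #connect returns a result instead of stopping the script, the outcome can be checked through msgId, which is present in both result shapes. The following is a minimal sketch under two assumptions: the context input endpoint is a hypothetical name, and the options argument is omitted for brevity.

```dsl
context {
    // Hypothetical input that holds the endpoint to call.
    input endpoint: string;
}

start node root {
    do {
        // Assumption: the options argument may be omitted.
        var result = #connect($endpoint);
        if (result.msgId == "FailedOpenSessionChannelMessage") {
            // The connection attempt failed, so end the script here.
            #log("Could not establish the connection.");
            exit;
        }
        #sayText("Hello! The connection is established.");
        #disconnect();
        exit;
    }
}
```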

connectSafe (blocking call)

Establishes a connection (starts a call) and returns the result of the attempt. If the connection failed, it stops script execution with the error "Connection failed". Blocks execution until the result of the connection attempt is received.

#connectSafe(endpoint, options);

Parameters

| Name | Type | Description |

|---|---|---|

| endpoint | string | endpoint to connect to |

| options | ConnectOptions | see Connect Options |

Returns

object

Object with the following fields:

| Name | Type | Description |

|---|---|---|

| message | IConnectionResult | message received in response to a connection request |

IConnectionResult is an object with the following fields:

| Name | Type | Description |

|---|---|---|

| msgId | string literal | contains value "OpenedSessionChannelMessage" |

| endpoint | string | endpoint with which a connection is established |

connectOptions

| Name | Type | Description |

|---|---|---|

| humanName | string | Name of the human in the transcription used by #getTranscription, #getFormattedTranscription, #answerWithGPT and #askGPT. For example, agent in a warm transfer. Default: human |

| agentName | string | Name of the agent in the transcription used by #getTranscription, #getFormattedTranscription, #answerWithGPT and #askGPT. For example, agentVerification in a warm transfer. Default: ai |

| sip_fromUser | string | Overrides SIP FROM user part field (DID) for an outbound call |

| sip_server | string | Overrides SIP target for an outbound call |

| sip_domain | string | Overrides SIP domain for an outbound call |

| sip_displayName | string | Overrides Display name for an outbound call |

| sip_authUser | string | Overrides username for SIP Authentication |

| sip_authPassword | string | Overrides SIP password |

| sip_transport | "tcp" or "udp" | Overrides transport protocol for SIP |

| cache_tts_before_connect | boolean | False will disable TTS caching of the phrasemap. It might reduce time of picking up inbound call. Default value: true |

| pauseOnVoice | string | True for pausing current phrase pronunciation, if the human starts to speak. Default value: false |

| pauseOnVoiceDelay | string | Delay in seconds before stop speaking (start of fade out) when human is interrupting the agent. Related to pauseOnVoice. Default value: 0 |

| pauseOnVoiceFadeTime | string | Number in seconds to fade in and fade out on interrupt. Default value: 0.1 |

| sip_diversion_user_0 | string | First diversion header's user part |

| sip_diversion_domain_0 | string | First diversion header's domain |

| sip_diversion_user_1 | string | Second diversion header's user part |

| sip_diversion_domain_1 | string | Second diversion header's domain |

| sip_x_header_name | string | Custom X-headers (e.g., X-Header-Name becomes sip_x_header_name) |

| stt_auto_detect_languages | string | List of language codes (like en-US,de-DE) for auto detection |

| stt_normalization | string | "true" or "false", default "true" - control of Inverse Text Normalization for Speech To Text |

| stt_punctuation | string | "true" or "false", default "true" - control of punctuation for Speech To Text |

| stt_keyword_boost | string[] | Array of keyword strings for boosting or suppressing recognition probability during the connection. Format: plain word (e.g., "apple") or word with weight "word:weight" where weight ranges from -1 (suppress) to 1 (boost). Note: Some STT providers may not support negative weight values. |
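
A hedged sketch of passing some of these options to #connectSafe. The option names and value types come from the table above; the values themselves and the context input endpoint are assumptions for illustration, and the options are assumed to be passed as a positional object, as in the #connectSafe(endpoint, options) signature.

```dsl
context {
    // Hypothetical input that holds the endpoint to call.
    input endpoint: string;
}

start node root {
    do {
        #connectSafe($endpoint, {
            // Shown to the callee as the display name of the outbound call.
            sip_displayName: "Support",
            sip_transport: "tcp",
            // Turn off punctuation in Speech To Text results.
            stt_punctuation: "false",
            // Pause the current phrase when the human starts to speak.
            pauseOnVoice: "true"
        });
        #sayText("Hi, this is the support line.");
        #disconnect();
        exit;
    }
}
```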

getConnectOptions

Returns last options obtained from the connect operation. Must be used only after a successful #connect() or #connectSafe() call.

#getConnectOptions();

Returns

object

Object with the following fields:

| Field | Type | Description |

|---|---|---|

| options | { [name: string]: string } | Map of connection options #connectOptions where keys are option names and values are their string values |
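
For illustration, the options could be read back right after connecting. This sketch assumes a hypothetical context input named endpoint and uses humanName only as an example option.

```dsl
context {
    // Hypothetical input that holds the endpoint to call.
    input endpoint: string;
}

start node root {
    do {
        #connectSafe($endpoint, { humanName: "customer" });
        // Must be called only after a successful connect.
        var connectOptions = #getConnectOptions();
        // options is a map from option names to their string values.
        #log(connectOptions.options);
        #disconnect();
        exit;
    }
}
```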

forward

Emits a SIP REFER with the Refer-To username equal to the endpoint for SIP-based channels. Does nothing for a text-based channel (chat).

#forward(endpoint);

Parameters

| Name | Type | Description |

|---|---|---|

| endpoint | string | endpoint to connect to |

Returns

boolean

Always true.

disconnect (blocking call)

Closes the connection (ends the call). Blocks execution until the result of the attempt to close the connection is received.

#disconnect();

Returns

object

Object with the following fields:

| Name | Type | Description |

|---|---|---|

| hangupMsgId | string | the identifier of the message indicating that the connection was closed |
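
A small sketch of ending a call and reading the returned field. The node name goodbye and the spoken text are made up for the example.

```dsl
node goodbye {
    do {
        #sayText("Thank you for calling. Goodbye!");
        // Blocks until the connection is closed.
        var result = #disconnect();
        #log(result.hangupMsgId);
        exit;
    }
}
```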

disableRecognition

Disables speech-to-text (STT) and natural-language understanding (NLU) in the current block or main context (the main graph is implicitly a block too).

Note that disabling recognition pauses the calculation of the duration of the current session.

#disableRecognition();

Returns

boolean

Always true.

enableRecognition

Enables speech-to-text (STT) and natural-language understanding (NLU) in the current block or main context (the main graph is implicitly a block too).

Note that enabling recognition turns on the calculation of the duration of the current session.

#enableRecognition();

Returns

boolean

Always true.
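
The two functions are typically used as a pair. The sketch below turns recognition off while a long notice is read and back on before a question is asked; the node names, the texts, and the "yes" and "no" intents are assumptions for this example.

```dsl
node read_terms {
    do {
        // Nothing the user says is recognized while the notice is read.
        #disableRecognition();
        #sayText("Please listen to the following terms and conditions.");
        // Recognition is needed again to understand the answer.
        #enableRecognition();
        #sayText("Do you agree?");
        wait *;
    }
    transitions {
        agreed: goto agreed on #messageHasIntent("yes");
        declined: goto declined on #messageHasIntent("no");
    }
}

node agreed {
    do {
        #sayText("Thank you.");
        exit;
    }
}

node declined {
    do {
        #sayText("Understood.");
        exit;
    }
}
```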

noiseControl

The noiseControl function enables or disables background noise in the current channel. It can be called only after establishing a connection using either the connect or connectSafe methods.

Parameters

| Name | Type | Description |

|---|---|---|

| isEnabled | boolean | false disables background noise |

Returns

boolean

Always true

Example

    node some_node {
        do {
            #noiseControl(false);
        }
    }

recordControl

The recordControl function manages the recording of audio in the current channel. It can be invoked only after establishing a connection using either the connect or connectSafe methods.

Parameters

| Name | Type | Description |

|---|---|---|

| state | "pause" | "resume" | "pause" to pause the recording of audio (replacing it with silence to maintain timing), or "resume" to resume recording. |

Returns

boolean

Always true

Example

    node some_node {
        do {
            #recordControl("pause");
            //... do smth.
            #recordControl("resume");
        }
    }

NLG Control

say (blocking call)

Sends a command to say a phrase from the connected phrasemap.

Blocks control until the phrase has been said (or interrupted).

#say(phraseId, args, repeatMode, interruptible, options);

Parameters

| Name | Type | Description |

|---|---|---|

| phraseId | string | phrasemap key of the phrase to be spoken |

| args? | {[x:string]:unknown;} | dynamic phrase arguments that will be used to construct phrase (see NLG doc) |

| repeatMode? | IRepeatMode | control repeating logic, (see IRepeatMode) default value: override |

| interruptible? | boolean | whether the phrase could be interrupted, default value: false |

| options? | { speed?: number; emotion?: string; interruptDelay?: number; interruptConditions?: (InterruptCondition | InterruptCondition[])[] } | Override speed and emotion of saying text, delay before interrupting by human voice and interruptConditions (see Say Options) |

Returns

boolean

true if the phrase was said completely, false if it was interrupted.
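
A minimal sketch of calling #say and using its return value. The phrasemap key greeting, its name argument, and the context input name are hypothetical and would have to exist in your own phrasemap and context.

```dsl
context {
    // Hypothetical input with the name of the person being called.
    input name: string;
}

node greet {
    do {
        // "greeting" is a hypothetical phrasemap key that takes a "name" argument.
        var finished = #say("greeting", { name: $name },
            interruptible: true,
            options: { speed: 1.1, interruptDelay: 1.0 });
        if (!finished) {
            // false means the user interrupted the phrase.
            #log("The greeting was interrupted.");
        }
        exit;
    }
}
```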

sayChanneled (blocking call)

#sayChanneled(phraseId, args, channelIds, options);

Channeled version of the builtin function #say.

Similarly to the #say it sends a command to pronounce a phrase from a phrasemap.

Unlike the #say function, #sayChanneled allows you to pronounce phrase in the specific channels provided by channelIds parameter.

If channelIds is not provided or provided as an empty array, then the phrase will be pronounced in all channels.

It does not affect phrase buffer, so it does not have repeatMode parameter.

Blocks control until the phrase is spoken.

Parameters

| Name | Type | Description |

|---|---|---|

| phraseId | string | Phrasemap key of the phrase to be spoken |

| args? | {[x:string]:unknown;} | Dynamic phrase arguments that will be used to construct phrase (see NLG doc) |

| channelIds? | string[] | IDs of the channels to send the command to. If not provided or an empty array, the command will be sent to all available channels |

| options? | { speed?: number; emotion?: string; useGluing?: boolean; } | Override speed and emotion of saying text (see Say Options) |

Returns

boolean

Always true as it can not be interrupted.

sayText (blocking call)

Sends a command to say the text. Differs from say in that the text parameter is interpreted literally rather than being resolved via phrasemap.

Blocks control until the phrase has been said (or interrupted).

Warning: it is not recommended to use this function in production.

More details:

This function does not require a phrasemap, but that comes at a cost: no static analysis checks whether the phrase is available (for prerecorded speech), no pre-synthesis is performed (for synthesized speech), which may cause delays at runtime, and no caching is used, so the text is re-synthesized on each run. The function is therefore suitable for demonstrations, where it keeps the script as simple as possible, but in general it is not recommended for production use.

#sayText(text, repeatMode, interruptible, options);

Parameters

| Name | Type | Description |

|---|---|---|

| text | string | text to say |

| repeatMode? | IRepeatMode | control repeating logic, (see IRepeatMode) default value: override |

| interruptible? | boolean | whether the phrase could be interrupted, default value: false |

| options? | { speed?: number; emotion?: string; interruptDelay?: number; interruptConditions?: (InterruptCondition | InterruptCondition[])[] } | Control speed, emotion of saying text, delay before interrupting by human voice and interruptConditions (see Say Options) |

Returns

boolean

true if the phrase was completely said, false if it was interrupted.

Examples

    // just say some text
    #sayText("Hello, how are you?");

    // ----------
    // say this text (will not be repeated if you call #repeat)
    #sayText("Hello, how are you?", "ignore");

    // ----------
    #sayText("Hello, how are you?");
    // add to phrase buffer.
    #sayText("Would you like some coffee?", "complement");
    // Call of the #repeat will say "Hello, how are you? Would you like some coffee?"

    // ----------
    // this phrase can be interrupted by the user, if they say something
    // during `options.interruptDelay` sec (default value is 2 sec)
    #sayText("Hello, how are you?", interruptible: true);

    // ----------
    // say this phrase fast
    #sayText("Hello, how are you?", options: { speed: 1.5 });

    // ----------
    // say this phrase with emotion extracted from text "I love you"
    // NOTE: you need to enable emotional dasha tts with `conv.audio.tts = "dasha-emotional";`
    #sayText("Hello, how are you?", options: { emotion: "from text: I love you" });

    // ----------
    // say this phrase with emotion extracted from text "I love you" and do it slow
    #sayText("Hello, how are you?", options: { emotion: "from text: I love you", speed: 0.7 });

    // ----------
    // build phrase from variable
    #sayText("Hello, " + $name + ", how are you?");

    // ----------
    // this phrase can be interrupted by intent "wait"
    #sayText("You know, I'm gonna tell you some very long long story...",
        options: { interruptConditions: [{ intent: "wait" }] });

sayTextChanneled (blocking call)

Channeled version of the builtin function #sayText.

Similarly to #sayText, it sends a command to pronounce the given text literally, without resolving it via a phrasemap.

Unlike the #sayText function, #sayTextChanneled allows you to pronounce phrase in the specific channels provided by channelIds parameter.

If channelIds is not provided or provided as an empty array, then the phrase will be pronounced in all channels.

It does not affect phrase buffer, so it does not have repeatMode parameter.

Also, it cannot be interrupted.

Blocks control until the phrase is spoken.

Parameters

| Name | Type | Description |

|---|---|---|

| text | string | Text to say |

| channelIds? | string[] | IDs of the channels to send the command to. If not provided or an empty array, the command will be sent to all available channels |

| options? | { speed?: number; emotion?: string; } | Control speed and emotion of saying text (see Say Options) |

Returns

boolean

Always true as it can not be interrupted.

repeat (blocking call)

Sends a command to repeat phrases from the phrase buffer (see repeatMode argument of say or sayText).

Because phrases can vary (the variations are set in the phrasemap), calling #repeat() may enliven a dialogue and help it sound more human-like.

Blocks control until the phrase has been said (or interrupted).

#repeat(accuracy, interruptible, options);

Parameters

| Name | Type | Description |

|---|---|---|

| accuracy? | IRepeatAccuracy | Phrasemap sub-key to use (default value: "repeat") |

| interruptible? | boolean | Whether the phrase could be interrupted, default value: false |

| options? | { interruptDelay?: number; } | Control delay before interrupt (see Say Options) |

IRepeatAccuracy is an enum of string literals that denote which phrasemap sub-key will be used to pronounce the phrase:

- first

- repeat

- short

Returns

boolean

true if the phrase was completely said, false if it was interrupted.
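A typical use is a digression that replays the phrase buffer when the user asks for it. The sketch below assumes a hypothetical custom intent named "repeat" and uses the named-argument call style shown for #sayText; it is an illustration, not a prescribed pattern.

```dashascript
digression repeat_please {
    conditions {
        // "repeat" is a hypothetical intent defined in your NLU data
        on #messageHasIntent("repeat");
    }
    do {
        // replay the phrase buffer and allow the user to interrupt it
        var completed = #repeat(interruptible: true);
        if (!completed) {
            #log("The repeated phrase was interrupted.");
        }
        return;
    }
}
```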

Phrase buffer

The phrase buffer is a system buffer used to accumulate phrases that you may pronounce again to repeat them to a user.

Use the built-in function #repeat() to repeat the phrases accumulated in the buffer.

Because phrases can vary (as set in the phrasemap), calling #repeat() may enliven a dialogue and help it sound more human-like.

The content of the phrase buffer is controlled by the repeatMode argument passed when a phrase is pronounced (see IRepeatMode).

IRepeatMode

IRepeatMode is an enum of string literals that control the phrase buffer update logic:

- override: override the phrase buffer. If the phrase is pronounced with repeatMode: "override", the buffer will be cleaned and the phrase will be appended to the empty buffer.

- complement: append to the phrase buffer. The phrase buffer will not be cleaned and the current phrase will be appended to the buffer.

- ignore: do not change the phrase buffer. The phrase buffer will not be cleaned and the current phrase will not be appended to the buffer.

Example

Suppose, you have the following phrasemap content:

{
  "default": {
    "voiceInfo": {
      "lang": "en-US",
      "speaker": "default"
    },
    "phrases": {
      "how_are_you": {
        "first": [{ "text": "How are you doing?" }],
        "repeat": [{ "text": "How are you today?" }]
      },
      "i_said": [{ "text": "I was saying..." }]
    }
  }
}

... and the following DSL code:

#sayText("Looks like the weather is good today!"); // default repeatMode is "override"
// buffer: ["Looks like the weather is good today!"]

#sayText("I am wondering...", repeatMode: "override"); // override buffer content
// buffer: ["I am wondering..."]

#say("how_are_you", repeatMode: "complement");
// buffer: ["I am wondering...", "how_are_you"]

/** ... suppose some dialogue happens here ... */

#say("i_said", repeatMode: "ignore"); // buffer stays the same
// buffer: ["I am wondering...", "how_are_you"]

#repeat();

Then the last #repeat will trigger the following text to be pronounced by Dasha:

AI: "I am wondering..."
AI: "How are you today?"

So, the current buffer content was pronounced and (where possible) the "repeat" versions of phrases were used.

preparePhrase

Sends a command to prepare a phrase.

If a phrase specified in the phrasemap is static (i.e. it does not have any arguments), it is prepared automatically before the dialogue begins.

Otherwise, if the phrase is dynamic and requires some arguments, it cannot be prepared in advance, so it is prepared when the #say function is called.

The preparation process takes some time, so preparing a phrase at runtime may cause lags in the dialogue.

To avoid this, you may call the #preparePhrase function before the actual dialogue starts to force the preparation of a dynamic phrase with particular arguments.

The parameters phraseId and options are the same as for #say function.

Parameters

| Name | Type | Description |

|---|---|---|

| phraseId | string | phrasemap key of the phrase to be spoken |

| options | {emotion?: string; speed?: number;} | Override speed and emotion of saying text (see Say Options) |

Returns

boolean

Always true.

Say Options

NOTE: Different NLG control functions consume different options. See the description of the desired function.

| Name | Type | Description |

|---|---|---|

| speed | number | Defines speed of a synthesized phrase. Default value as in phrasemap |

| interruptDelay | number | Defines how long (in seconds) the human must speak to interrupt the phrase being pronounced. Default value is 2 |

| useGluing | boolean | If true, composite phrasemap phrases will be concatenated before synthesizing. Default value is true (recommended) |

| interruptConditions | (InterruptCondition | InterruptCondition[])[] | Defines triggers in user's utterance to interrupt currently pronouncing phrase. |

| pauseOnVoice | boolean? | Overrides current pauseOnVoice for this call, true for pausing current phrase pronunciation, if the human starts to speak. Default value: null - means that current pauseOnVoice configuration will be used from connectOptions |

| pauseOnVoiceDelay | number? | Delay in seconds before stop speaking (start of fade out) when human is interrupting the agent. Related to pauseOnVoice. Default value: 0 |

| cancellationName | string? | A unique name for this operation that can be used with #cancelToken to programmatically cancel the speech. When cancelled, the function returns false. Default value: null |

NOTE: If interruptDelay is set to a specific value, it will be considered an additional trigger. Otherwise, the default behaviour (interruption by a 2-second-long utterance) will be ignored.

type InterruptCondition =
    // triggers when the utterance has the specified sentiment
    { sentiment: "positive"|"negative"; } |
    // triggers when utterance contains specific entity
    { entity: string; value?: string; tag?: string; } |
    // triggers when utterance contains specific intent
    { intent: string; sentiment?: "positive"|"negative"; } |
    // triggers when utterance contains specific intent (same as { intent: string })
    string;

Examples

// this phrase can be interrupted if user's utterance contains negative intention,
// e.g. "no no no no"
#sayText("You know, I'm gonna tell you some very long long story...",
    options: { interruptConditions: [{ sentiment: "negative" }] }
);

// ----------
// this phrase can be interrupted by intent "wait"
#sayText("You know, I'm gonna tell you some very long long story...",
    options: { interruptConditions: [{ intent: "wait" }] }
);
// same as above (a plain string is shorthand for { intent: string })
#sayText("You know, I'm gonna tell you some very long long story...",
    options: { interruptConditions: ["wait"] }
);

// ----------
// this phrase can be interrupted by entity "fruit" of particular value "apple"
#sayText("You know, I'm gonna tell you some very long long story...",
    options: { interruptConditions: [{ entity: "fruit", value: "apple" }] }
);

// ----------
// this phrase can be interrupted by
// EITHER entity "fruit" (of any value) and intent "wait"
// OR entity "fruit" of particular value "apple"
#sayText("You know, I'm gonna tell you some very long long story...",
    options: {
        interruptConditions: [
            [{ entity: "fruit" }, { intent: "wait" }],
            { entity: "fruit", value: "apple" }
        ]
    }
);
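The timing-related options from the Say Options table can be combined in the same way. The fragment below is a minimal sketch that only uses options listed in that table; the specific values are arbitrary and chosen for illustration.

```dashascript
// let the user interrupt after 1 second of speech instead of the default 2
#sayText("Let me read you the full terms and conditions...",
    interruptible: true,
    options: { interruptDelay: 1 }
);

// pause this phrase when the human starts to speak,
// fading out 0.5 seconds after the voice is detected
#sayText("Your order contains three items...",
    options: { pauseOnVoice: true, pauseOnVoiceDelay: 0.5 }
);
```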

cancelToken

Cancels all currently executing built-in functions that were started with the matching cancellationName. This allows programmatic cancellation of ongoing speech operations from SDK events or other parts of your dialogue flow.

#cancelToken(name);

Parameters

| Name | Type | Description |

|---|---|---|

| name | string | The cancellation token name to trigger |

Returns

object

An empty object { }.

Behavior

- Cancels all currently executing #say, #sayText, #sayChanneled, #sayTextChanneled, #repeat, and #answerWithGPT operations that have a matching cancellationName in their options

- The cancelled function returns false (or completed: false for #answerWithGPT)

- Cancellation is immediate and stops the speech playback

- Cancellation tokens are isolated per operation - cancelling one operation does not affect subsequent operations using the same token name

Example

// SDK event handler that cancels the greeting when triggered
when SDKEvent cancelGreeting do {
    #cancelToken("greeting");
}

start node main {
    do {
        #connectSafe($endpoint);
        // This sayText can be cancelled by sending the "cancelGreeting" SDK event
        var result = #sayText("Hello, this is a long message that may need to be interrupted...",
            options: { cancellationName: "greeting" });
        if (!result) {
            #log("Speech was cancelled");
        }
        wait *;
    }
}

NLU Control

messageHasIntent

Checks if the phrase being processed contains the specified intent.

#messageHasIntent(intent, state);

Parameters

| Name | Type | Description |

|---|---|---|

| intent | string | the name of the intent being checked |

| state | string | polarity state, default value: positive |

State defines intent polarity. It works only for intents that allow it. Read here how to create custom polar intents.

Possible state values:

- positive

- negative

Returns

boolean

true if the phrase contains the specified intent, otherwise it returns false.

Example

digression hello {
    conditions {
        on #messageHasIntent("hello");
    }
    do { }
}

node can_i_help {
    do {
        #sayText("How can I help?");
        wait *;
    }
    transitions {
        transfer_money: goto transfer_money on #messageHasIntent("transfer_money");
    }
}

node transfer_money_confirm {
    do {
        #sayText("Do you confirm money transfer?");
        wait *;
    }
    transitions {
        positive: goto process_transfer on #messageHasIntent("agreement", "positive");
        negative: goto transfer_money on #messageHasIntent("agreement", "negative");
    }
}

messageHasAnyIntent

Checks if the phrase being processed contains any of the specified intents.

#messageHasAnyIntent(intents);

Parameters

| Name | Type | Description |

|---|---|---|

| intents | string[] | array of names of the intents being checked |

Returns

boolean

true if the phrase contains any specified intents, otherwise it returns false.

Example

node transfer_money_confirm {
    do {
        #sayText("Do you confirm money transfer?");
        wait *;
    }
    transitions {
        agree: goto process_transfer on #messageHasAnyIntent(["confirm", "agree"]);
        disagree: goto cancel_transfer on #messageHasAnyIntent(["cancel", "disagree"]);
    }
}

messageHasSentiment

Checks if the phrase being processed contains a specified sentiment.

Parameters

| Name | Type | Description |

|---|---|---|

| sentiment | string | name of the sentiment being checked |

Possible sentiment values:

- positive

- negative

Connect sentiment skill in .dashaapp to extract sentiment from messages.

#messageHasSentiment(sentiment);

Returns

boolean

true if the phrase contains the specified sentiment, otherwise it returns false.

Example

node transfer_money_confirm {
    do {
        #sayText("Do you confirm money transfer?");
        wait *;
    }
    transitions {
        agree: goto process_transfer on #messageHasSentiment("positive");
        disagree: goto cancel_transfer on #messageHasSentiment("negative");
    }
}

messageHasData

Checks if the phrase being processed fulfills a given filter condition.

#messageHasData(dataType, filter);

Parameters

| Name | Type | Description |

|---|---|---|

| dataType | string | a name of a skill that generates entities being checked |

| filter | IFilter | a filter that defines the requirements for a phrase |

IFilter is a map from string to boolean or string. The key of the map corresponds to the entity name, boolean value denotes if the entity must be present (true) or absent (false) in the message, string value sets a requirement for a concrete value of the entity.

Example

node transfer_money {
    do {
        #sayText("Which account would you like to transfer from?");
        wait *;
    }
    transitions {
        provide_data: goto validate_account on #messageHasData("account");
    }
    onexit {
        provide_data: do {
            set $account = #messageGetData("account", { value: true })[0]?.value??"";
        }
    }
}

Returns

boolean

true if the phrase fulfills the condition, false otherwise.
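The filter argument can also require a concrete entity value, as described for IFilter above. The sketch below assumes a hypothetical "fruit" entity and hypothetical target nodes; it follows the `{ value: ... }` filter shape used in the #messageGetData examples.

```dashascript
node ask_fruit {
    do {
        #sayText("Which fruit would you like?");
        wait *;
    }
    transitions {
        // fires only when a "fruit" entity with the value "apple" is present
        apple: goto apple_order on #messageHasData("fruit", { value: "apple" });
        // fires only when a "fruit" entity with the value "banana" is present
        banana: goto banana_order on #messageHasData("fruit", { value: "banana" });
    }
}

// hypothetical target nodes, kept minimal for the example
node apple_order { do { #sayText("One apple, coming up."); exit; } }
node banana_order { do { #sayText("One banana, coming up."); exit; } }
```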

messageGetData

Extracts entities fulfilling a given condition.

#messageGetData(dataType, filter);

Parameters

| Name | Type | Description |

|---|---|---|

| dataType | string | a name of a skill that generates entities being checked |

| filter | IFilter | a filter that defines the requirements for a phrase |

IFilter is a map from string to boolean or string. The key of the map corresponds to the entity name, boolean value denotes if the entity must be present (true) or absent (false) in the message, string value sets a requirement for a concrete value of the entity.

Returns

object[]

An array of entities extracted from the phrase being processed. Each element of the returned array is an object whose keys are strings corresponding to entity names and whose values are strings corresponding to entity values.

Example

node transfer_money {
    do {
        #sayText("Which account would you like to transfer from?");
        wait *;
    }
    transitions {
        provide_data: goto validate_account on #messageHasData("account");
    }
    onexit {
        provide_data: do {
            set $account = #messageGetData("account", { value: true })[0]?.value??"";
        }
    }
}

node say_food {
    do {
        for (var item in #messageGetData("food")) {
            #sayText(item?.value ?? "");
        }
    }
}

node say_food {
    do {
        var account = #messageGetData("account", { value: true, tag: true })[0];
        if (account?.tag == "source") {
            set $source_account = account?.value??"";
        }
    }
}

external function resolve_account(info: string): string;

node myNode {
    do {
        set $account = #messageGetData("account", { value: true })[0]?.value??"";
        // pass the extracted value to the external function declared above
        set $account = external resolve_account($account);
    }
    transitions {}
}

getSentenceType

Gets the sentence type of the current message text.

Read more about the sentence types here.

#getSentenceType();

Returns

string?

Possible values:

- statement - Declarative sentences: Used to make statements or relay information

- request - Imperative sentences: Used to make a command or give a direct instruction

- question - Interrogative sentences: Used to ask a question

- null - the sentence type is not classified (it will be classified once you create custom intents and/or entities)

Example

if (#getSentenceType() == "request") {
    #sayText("Sorry, I can't do it now", repeatMode: "ignore");
}
else if (#getSentenceType() == "question") {
    #sayText("Sorry, I don't understand the question", repeatMode: "ignore");
}
else {
    #sayText("I don't understand. Repeat please.", repeatMode: "ignore");
}

getMessageText

Returns last processed message text (what user said).

Available only in a digression and in the onexit section of a transition whose condition has the tag ontext.

Note: ontext is the default tag in conditions and can be omitted.

Returns

String with text said by the user

Example

digression hello {
    conditions {
        on #messageHasIntent("hello");
    }
    do {
        var text = #getMessageText();
        #log(text);
        return;
    }
}

node myNode {
    do {
        wait *;
    }
    transitions {
        next: goto next on true;
    }
    onexit {
        next: do {
            var text = #getMessageText();
            #log(text);
        }
    }
}

node next {
    do {
        exit;
    }
}

Dialogue control

getCurrentTime

#getCurrentTime();

Returns

number

Time in milliseconds since the script launch

getIdleTime

#getIdleTime();

Returns

number

Time in milliseconds since the last phrase/word said by the system or the interlocutor, or since the end of the wait.

startTimer

Starts a timer in the context of the current block.

#startTimer(duration);

Parameters

| Name | Type | Description |

|---|---|---|

| duration | number | timer duration in milliseconds |

Returns

string

The handler of the started timer.

isTimerExpired

Checks if the timer has expired.

#isTimerExpired(instanceId);

Parameters

| Name | Type | Description |

|---|---|---|

| instanceId | string | handler of the checked timer |

Returns

boolean

true if the timer has expired, otherwise false.
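#startTimer and #isTimerExpired are meant to be used together: the first returns a handle and the second checks it. The sketch below is a hypothetical node that measures whether the user started speaking within three seconds; the durations and node name are illustrative only.

```dashascript
node wait_for_answer {
    do {
        // start a 3-second timer and keep its handle
        var timer = #startTimer(3000);
        // block for up to 5 seconds until a voice is detected
        var heard = #waitForSpeech(5000);
        if (heard && !#isTimerExpired(timer)) {
            #log("The user started speaking within 3 seconds.");
        }
        else {
            #log("The user was slow to answer or stayed silent.");
        }
        wait *;
    }
}
```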

getLastVisitTime

#getLastVisitTime(name);

Parameters

| Name | Type | Description |

|---|---|---|

| name | string | name of the target node |

Returns

number

The time of the last visit to the specified node in the current context, counted from the start of the script. Returns 0 if the node has not been visited.

getVisitCount

#getVisitCount(name);

Parameters

| Name | Type | Description |

|---|---|---|

| name | string | name of the target node |

Returns

number

The number of visits to the specified node in the current context.
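One common use of the visit count is to stop re-asking the same question. The sketch below is a hypothetical node that gives up after several visits; whether the current visit is already included in the count is an assumption you should verify, and the threshold is arbitrary.

```dashascript
node ask_name {
    do {
        // stop looping once this node has been visited several times
        if (#getVisitCount("ask_name") > 3) {
            #sayText("Sorry, I could not catch your name. Goodbye.");
            exit;
        }
        #sayText("What is your name?");
        wait *;
    }
    transitions {
        // for illustration only: any reply returns to the same node
        retry: goto ask_name on true;
    }
}
```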

waitingMode

Pauses IdleTime calculation (returned by the getIdleTime call) until the specified timeout expires, or until the interlocutor's voice is detected.

#waitingMode(duration);

Parameters

| Name | Type | Description |

|---|---|---|

| duration | number | duration to wait in milliseconds |

Returns

boolean

Always true.
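For instance, when the user asks for a moment, idle-time tracking can be paused so that logic based on #getIdleTime does not fire too early. This is a sketch with a hypothetical node name and an arbitrary 30-second duration.

```dashascript
node hold_on {
    do {
        #sayText("Sure, take your time.");
        // pause idle time calculation for up to 30 seconds,
        // or until the interlocutor's voice is detected
        #waitingMode(30000);
        wait *;
    }
}
```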

waitForSpeech (blocking call)

Note: For more details, see Complex logic at the start of conversation.

Blocks execution until the voice of the interlocutor is detected, or until the specified timeout expires.

If the timeout expires, it ensures a text event on the current (or closest next, if there is no current) segment: if text is missing in the original segment, an empty text is added to the segment.

The simulated recognition result does not contain intents.

#waitForSpeech(duration);

Parameters

| Name | Type | Description |

|---|---|---|

| duration | number | duration to wait in milliseconds |

Returns

boolean

true, if a voice was found, otherwise false.
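The returned boolean makes it possible to branch on who speaks first at the start of a call. The sketch below assumes a `$phone` input variable, as in other examples in this document; the phrases and the 2-second timeout are illustrative.

```dashascript
start node root {
    do {
        #connectSafe($phone);
        // wait up to 2 seconds for the user to speak first
        if (#waitForSpeech(2000)) {
            // a voice was detected, e.g. the user said "Hello?"
            #sayText("Hi, this is Dasha speaking.");
        }
        else {
            // nobody spoke before the timeout expired
            #sayText("Hello, can you hear me?");
        }
        wait *;
    }
}
```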

ensureRSM

Note: this function is intended to enable complex logic at the start of a conversation and should be used in pair with waitForSpeech. For more details, see Complex logic at the start of conversation.

Ensures text event on the current (or closest next, if there is no current) segment: if text is missing in the original segment, an empty text will be added to the segment.

#ensureRSM();

Returns

boolean

Always true.

setVadPauseLength

VAD refers to voice activity detection. Just as it sounds - this is the time that Dasha monitors the channel for additional user speech before responding to the user.

The function #setVadPauseLength can be helpful when the user is asked a question that may require a long answer with long pauses in speech. For example, when asked "Tell me your address, please?" the user may need some time to phrase their reply. In such a case the VAD pauses should be longer than in regular conversation, for example a simple "yes-no" reply.

It sets the multiplier of the pause detection parameter (see vadPauseDelay parameter) which is used to detect the end of user speech. The default value is 1.0.

You may want to use this if you feel Dasha is too quick to respond to the user.

Parameters

| Name | Type | Description |

|---|---|---|

| multiplier | number | pause detection delay multiplier |

Returns

boolean

Always true.
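The address scenario described above could look roughly like this. The sketch assumes the multiplier stays in effect until it is changed again, which is why it is reset to the default 1.0 on exit; node names and the multiplier value are hypothetical.

```dashascript
node ask_address {
    do {
        // allow pauses twice as long as usual before the reply is considered finished
        #setVadPauseLength(2.0);
        #sayText("Tell me your address, please?");
        wait *;
    }
    transitions {
        got_answer: goto confirm_address on true;
    }
    onexit {
        got_answer: do {
            // restore the default multiplier for regular conversation
            #setVadPauseLength(1.0);
        }
    }
}

// hypothetical follow-up node
node confirm_address { do { #sayText("Thank you."); exit; } }
```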

getAsyncBlockDescription

Gets description of specified async block.

If id is not provided (or null) then the function returns description of the current block.

Parameters

| Name | Type | Description |

|---|---|---|

| id? | string | id of async block |

Returns

{ isSessionOpened: boolean; exist: boolean; id: string; }

- isSessionOpened - true if this async block's session is ongoing

- exist - true if this async block exists

- id - id of this async block

isBlockMessage

Checks if current message is async block message of specified type.

If argument type is not provided, checks if current message is async block message of any type.

Params

| Name | Type | Description |

|---|---|---|

| type? | "Content" | "Terminate" | "Bridge" | "Unbridge" | "Terminated" | checking type of async block |

Returns

boolean

true if current message is async block message of specified type

getAsyncBlockMessage

Gets content of async block message.

Params

None

Returns

AsyncBlockMessage (see Async Block Messages)

sendMessageToAsyncBlock

Sends message from current async block to async block with targetRouteId.

Params

| Name | Type | Description |

|---|---|---|

| targetRouteId | string | id of target async block |

| type | "Content" | "Terminate" | type of async block message |

| content? | unknown | content of async block message |

Returns

boolean

bridgeChannel

Bridges the current async block channel and target async block channels.

If biDirectional == true the current user will hear target users (and vice versa), but target users will not hear each other (unless there are bridges between them already).

Otherwise, the current user will hear the bridged users.

Params

| Name | Type | Description |

|---|---|---|

| targetRouteIds | string[] | ids of target async blocks |

| biDirectional | boolean | if true the established connections will be bidirectional |

Returns

boolean

unbridgeChannel

Unbridges current async block channel and target async block channels.

Params

| Name | Type | Description |

|---|---|---|

| targetRouteIds | string[] | ids of target async blocks |

| biDirectional | boolean | if true the established connections will be bidirectional |

Returns

boolean

Utilities

httpRequest (blocking call)

var response = #httpRequest(url: "https://example.com", method: "POST", body: "Sample data");

var response = #httpRequest(url: "https://example.com", method: "GET");

Performs an HTTP request, blocking the script execution until a response is returned, or a specified timeout is reached.

By default, requests are sent by the backend part of your application (through the SDK), not from Dasha itself; see the useSdk parameter. See the SDK docs to learn how to customize the requests being sent, or disable this feature altogether.

Parameters

| Name | Type | Default | Description |

|---|---|---|---|

| url | string | n/a | a url to make a request to |

| body | unknown | null (no body) | request body |

| method | string | "GET" | http method to use |

| headers | { [name: string]: string } | {} | headers to send |

| timeout | number | 0 | request timeout in milliseconds; 0 means wait indefinitely |

| requestType | "json" | "text" | "json" | if set to "json", serializes body into JSON |

| responseType | "json" | "text" | "json" | if set to "json", deserializes the response body as JSON |

| queryParams | { [name: string]: string } | {} | query params (?param=value&param2=value2) of the request |

| useSdk | boolean | true | if true, sends the HTTP request using the SDK; if false, sends it directly from the Dasha.ai platform cloud |

Return type

An object with the following fields:

| Name | Type | Description |

|---|---|---|

| status | number | HTTP status code |

| statusText | string | HTTP status text |

| headers | { [name: string]: string } | HTTP response headers |

| responseType | "json" | "text" | response type as passed to #httpRequest() |

| body | unknown | response body |

| rawBody | string | unparsed response body |

Example

start node root {
    do {
        // Establishing a safe connection to user's phone
        #connectSafe($phone);
        // Waiting for 1 second to say the welcome message
        #waitForSpeech(1000);
        // Welcome message
        #sayText("Hi, how can I help you today?");
        var url = "https://ptsv2.com/t/vwlcn-1639739558/post";
        var body = "Dasha has responded";
        var response = #httpRequest(url: url, method: "POST", body: body);
        #log(response);
        wait *; // Waiting for reply
    }
    transitions {
        // Here you give directions to which nodes the conversation will go
    }
}
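The fields of the returned object can be used to react to failures. The fragment below is a sketch: the URL, header value, and query parameter are placeholders, and the unquoted header key is an assumption about object-literal syntax. It only uses parameters and return fields listed in the tables above.

```dashascript
var response = #httpRequest(
    url: "https://example.com/api/balance",   // placeholder URL
    method: "GET",
    headers: { Authorization: "Bearer YOUR_TOKEN" },
    queryParams: { account: "12345" },
    timeout: 5000                              // give up after 5 seconds
);
if (response.status == 200) {
    // body is already deserialized because responseType defaults to "json"
    #log(response.body);
}
else {
    #log("Request failed: " + #stringify(response.status) + " " + response.statusText);
}
```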

random

#random();

Returns

number

A pseudo-random number between 0 and 1 (including 0 but not including 1) using a uniform distribution of the random variable.
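Because the result is uniformly distributed in [0, 1), comparing it with a threshold gives a simple way to vary behaviour. The fragment below is a small sketch that picks one of two greetings with roughly equal probability.

```dashascript
// values below 0.5 occur about half of the time
if (#random() < 0.5) {
    #sayText("Hi there!");
}
else {
    #sayText("Hello!");
}
```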

sendDTMF

Sends a DTMF message if the current channel is a SIP channel.

Parameters

| Name | Type | Description |

|---|---|---|

| code | string | The DTMF message to send |
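A minimal sketch of a call, assuming a SIP channel and a hypothetical IVR menu on the other side that expects the key "1":

```dashascript
node press_one {
    do {
        // send the DTMF tone for key "1" to the remote side
        #sendDTMF("1");
        wait *;
    }
}
```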

getDTMF

Returns the DTMF message (buttons pressed on the phone) if the last received message is DTMF. Relevant only for SIP channels.

Relevant only when you are handling messages with the tag onprotocol.

Returns

string if a DTMF message has just been received, null otherwise.

Example

digression dtmf {
    conditions {
        on #getDTMF() is not null tags: onprotocol;
    }
    do {
        var data = #getDTMF();
        #log(data);
        return;
    }
}

parseInt

Converts a string to an integer.

If a string represents float number it will be floored to an integer.

If a string can not be converted into a number, the function will return NaN.

Parameters

| Name | Type | Description |

|---|---|---|

| target | string | A string to convert into a number |

Returns

number

An integer number if string could be converted into a number, NaN otherwise.

Example

node some_node {
    do {
        var num1 = #parseInt("10"); // number 10
        var num2 = #parseInt("10.5280"); // number 10
        var num3 = #parseInt("123aaa"); // NaN
        var num4 = #parseInt("aaa123"); // NaN
    }
}

parseFloat

Converts a string to a floating-point number.

If a string can not be converted into a number, the function will return NaN.

Parameters

| Name | Type | Description |

|---|---|---|

| target | string | A string that contains a floating-point number |

Returns

number

A floating-point number if string could be converted into a number, NaN otherwise.

Example

node some_node {
    do {
        var num1 = #parseFloat("10"); // number 10
        var num2 = #parseFloat("10.5280"); // number 10.5280
        var num3 = #parseFloat("123.5aaa"); // NaN
        var num4 = #parseFloat("aaa123.5"); // NaN
    }
}

stringify

Converts any value to a string.

Parameters

| Name | Type | Description |

|---|---|---|

| target | unknown | An object to convert |

Returns

string

Stringified object

Example

node some_node {
    do {
        var pi = 3.1415;
        #sayText("the value of pi is " + #stringify(pi));
        var obj = {a: 1};
        #log("obj = " + #stringify(obj));
    }
}

log

Outputs message to the application output. The message may be any object that could be created in DSL.

Parameters

| Name | Type | Description |

|---|---|---|

| target | unknown | An object to log |

Transcription

getTranscription

Returns a dialogue transcription as an array of objects.

Parameters

| Name | Type | Description |

|---|---|---|

| options | TranscriptionOptions | Transcription options |

Returns

{ source: "human"|"ai"; text: string; name: string?; tool: { name: string; args: unknown?; result: unknown?; callId: string; executionTime: number; }?; }[]

Where name is a humanName option of connectOptions or null if not set.

getFormattedTranscription

Returns a dialogue transcription as a string.

Parameters

| Name | Type | Description |

|---|---|---|

| options | TranscriptionOptions | Transcription options |

Returns

- If humanName in #connect/#connectSafe is not set:

ai: AI sentence
human: Human sentence

- If humanName in #connect/#connectSafe is set to agent:

ai: AI sentence
agent: Agent sentence

- If include_tool_calls is set to true:

ai: AI sentence
human: Human sentence
ai: functionName({"arg1":val1,"arg2":val2}) => res1

- Warm transfer with humanName set for the agent connection, #disableRecognition() not called, and the channelIds option set to []:

ai: AI sentence
human: Human sentence
....
agent: Agent sentence
human: Human sentence
...

TranscriptionOptions

| Name | Type | Description |

|---|---|---|

| channelIds | string[]? | Optional, for getting transcription in multiple connections. Empty array - get all. null - current connection |

| history_length | number? | Number of most recent phrases to include, counted from the end of the logged history |

| include_tool_calls | boolean? | Optional, includes tool calls in the transcription (default value: false) |

| allow_incomplete | boolean? | Optional, includes text currently being spoken by the human in the transcription (default value: false) |

| include_system_messages | boolean? | Optional, include system messages (default value: true) |

addHistoryMessage

Adds a message to the end of the current conversation history.

#addHistoryMessage(message);

Parameters

| Name | Type | Description |

|---|---|---|

| message | { source: "system"; text: string; channelId?: string?; } | { source: "ai"; name?: string?; text: string; thinking?: string?; channelId?: string?; } | { source: "human"; text: string; name?: string?; channelId?: string?; } | Message to add to the history. - source - Source of the message: "system", "ai", or "human" - text - Message text - name - Name of the message source (for "ai" and "human") - thinking - Thinking process text (for "ai") - channelId - ID of the channel where the message should be added |

Returns

{ uniqueId: string; }

An object containing a unique identifier for the added message.

Example

// Add system message to history
#addHistoryMessage({ source: "system", text: "Call started at 15:30" });

// Add AI message with thinking to history
#addHistoryMessage({
    source: "ai",
    name: "assistant",
    text: "I can help with your account balance inquiry",
    thinking: "The user wants to know their account balance"
});

// Add human message to history
#addHistoryMessage({
    source: "human",
    name: "customer",
    text: "What's my current account balance?"
});

changeLanguage

Changes the language and speaker for the current async block. If you use #say, the phrasemap must contain a section for the provided speaker.

#changeLanguage(language, speaker, speed, emotion, options);

Parameters

| Name | Type | Description |

|---|---|---|

| language | string | Language code to change to (e.g., "en-US", "es-ES") |

| speaker | string | Speaker identifier to use for the new language |

| speed? | number | Optional speed setting for the new speaker. Default: null |

| emotion? | string | Optional emotion setting for the new speaker. Default: null |

| options? | {[x:string]:unknown; } | Optional additional options for the language change. Default: null |

Returns

boolean

Always true.

Example

node language_switch {
    do {
        // Switch to Spanish with a specific speaker
        #changeLanguage("es-ES", "elevenlabs/model_id/voice_id");
        #say("greeting_spanish");

        // Switch back to English with custom speed
        #changeLanguage("en-US", "elevenlabs/model_id/voice_id", 1.2);
        #say("greeting_english");

        // Switch to French with custom TTS endpoint and API key
        // Request will go to `https://your-custom-tts-endpoint.com/v1/text-to-speech/voice_id`
        // or to `https://your-custom-tts-endpoint.com/v1/text-to-speech/voice_id/stream` for ElevenLabs
        #changeLanguage("fr-FR", "elevenlabs/model_id/voice_id", options: {
            apikey: "your-custom-api-key",
            endpoint: "https://your-custom-tts-endpoint.com"
        });

        // Switch to French with custom API key only
        #changeLanguage("fr-FR", "elevenlabs/model_id/voice_id", options: {
            apikey: "your-custom-api-key"
        });
        #sayText("Bonjour, comment allez-vous?");
    }
}

Provider-Specific Options

These options can also be configured in phrasemap.json for persistent settings.

ElevenLabs Options

| Option | Type | Description |

|---|---|---|

| similarity_boost | number | Voice similarity boost setting. See ElevenLabs docs |

| stability | number | Voice stability setting. See ElevenLabs docs |

| style | number | Voice style setting. See ElevenLabs docs |

| use_speaker_boost | boolean | Enable speaker boost. See ElevenLabs docs |

| apikey | string | Custom API key for ElevenLabs service |

| endpoint | string | Custom endpoint URL for ElevenLabs service |

Cartesia Options

| Option | Type | Description |

|---|---|---|

| emotions | string[] | List of emotion strings, e.g., ["surprise:high", "sadness:low"]. See Cartesia docs |

| embedding | number[] | List of floats (size = 192) with voice embedding |

| apikey | string | Custom API key for Cartesia service |

| endpoint | string | Custom endpoint URL for Cartesia service |

Inworld Options

| Option | Type | Description |

|---|---|---|

| pitch | number | Pitch shift in range [-5, 5] |

| temperature | number | Model temperature in range [0, 2] |

| apikey | string | Custom API key for Inworld service |

| endpoint | string | Custom endpoint URL for Inworld service |